Basic operation takes time proportional to height of the tree in case of balanced tree.

for complete binary tree with n nodes has worst case of Th(log n)

for linear chain of n nodes it behaves like linked list and has worst case of Th(n)

Can be used as dictionary and priority queue

Properties of Binary Search Tree

All nodes in the left subtree of parent must be no greater than parent that is key[left] <= key[parent]All nodes in right subtree of parent must be no smaller than parent that is key[right] >= key[parent]

This property of binary search tree allows us to find sorted ordering in simple way by using inorder traversal

Tree Traversals

Process of visiting each node once.

Traversals are classified by the order in which root node is visited

1) Depth first traversal explores as far as along each node before backtracking

preorder (root left right)

inorder(left root right) gives ascending sorted order

postorder (left right root)

2)Breadth first traversal

examining nodes at each level before moving to next level

Binary tree traversal implementation with Recursive procedure and auxiliary data structure require stack space proportional to the height of the tree. In a poorly balanced tree, this can be quite considerable.

Stack requirement can be removed by threading the tree, which will improve the inorder traversal alone.

Preorder traversal------------Th(n)

PREORDER-TRAVERSE(tree)

if (tree not empty)

visit root of tree

PREORDER-TRAVERSE(left subtree)

PREORDER-TRAVERSE(right subtree)

Time complexity of recursive traversal

consider n-node tree

T(n) = T(left)+ T(right) + d

T(n)=T(k)+T(n-k+1)+d

T(n) = Th(n)

Example Consider tree

j <-- level 0

/ \

f k <-- level 1

/ \ \

a h z <-- level 2

\

d

inorder traversal a d f h j k z

preorder traversal j f a d h k z

postorder traversal d a h f z k j

breadth traversal j f k a h z d

Reconstruction Property of Binary Search Tree

A unique binary search tree is possible from its its inorder, preorder and inorder, postorder but not from preorder and postorder. All the three a possible in case of full binary tree.

Example For 4 nodes draw a tree that gives same preorder and inorder traversal

It will have no left childrens

a

\

b

\

c

\

d

is the same possible with postorder and inorder traversal ?

Traversal with explicit Stack

Every recursive call is Push operation and visit is print operation

The state of stack before first pop for

1) inorder

|_a _|

|_f__|

|_j__|

as in recursive call we call inorder(j) then on its left inorder(f) and then inorder(a) then print(a) ...

2) post order

|_a _|

|_f__|

|_j__|

3)preorder

|_ _|

|_ __|

|_j__|

before first pop

|_ _|

|_k _|

|_f__|

after first pop

Tree - Successor (x) ----- O(log n)

successor in sorted order determined by inorder traversalwe need to find successor of node x

case1 : If the right subtree of x is not null then need to traverse down and find

Tree-Minimum(right(x))

case2 : right subtree of x is null then successor of x is

lowest ancestor of x whose left child is also an ancestor of x i.e

y = parent(x)

while(y!=null and x=right(y))

x = y

y = parent(y)

return y

Example Consider tree below and we want to find successor of node 13

15

/

6

\

7 <-------y

\

13 <-------x

since right(y) == x so we move into loop

After first loop

15

/

6 <-------y

\

7 <-------x

\

13

here also right(y) == x

After second loop

15 <-------y

/

6 <-------x

\

7

\

13

right(y) which is null != x so we get 15 as ancestor of x

Time Complexity of Tree-Successor(x)

big O(h)

Inorder Traversal without using stacks

If each node stores reference to parent then traversal implementation is possible without stack

or visited flag. When we don't have parent pointer we need to use Threaded binary tree.

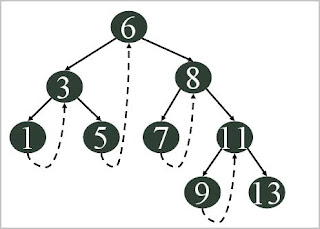

Right threaded binary

We need to know if a pointer is an actual link or a thread, so we keep a boolean for each pointer.Traversal

1) We start at the leftmost node in the tree, print it, and follow its right pointer

2) case 1 If its thread to the right, we output the node and continue to its right

case 2 If we follow a link to the right, we go to the leftmost node, print it, and continue

Example

consider below right threaded tree

1)we start at leftmost node i.e 1, print it and follow right pointer

2)right pointer is thread so we output 3 and move to its right

3)right pointer is link now to node 5 so we follow the leftmost node of 5 which is none so we print 5 and follow its right pointer and so..

Algorithm

x = leftmost(n);

while (x != null)

print x

If (right(x) = thread)

x = x.right;

else

x = leftmost(x.right);

Advantages

Binary trees have a lot of wasted space: the leaf nodes each have 2 null pointers.We can use these pointers to help us in inorder traversals

Insertion and Deletion

Deletion---- O(logn)

Tree-Delete( T,z)z is pointer to node of Tree T to be deleted

There are 3 possibilities

- deleting leaf node we modify the parent of z to replace z will null

- deleting a node with single child, splice z by making anew link between its child and its parent

- deleting a node with two children we splice out z, do not delete z instead choose either its inorder successor or predecessor and replace z with this and then delete successor

- or for two children we can say delete smallest key in the right subtree or largest one in the left subtree

Running time of deletion

running time of deletion procedure depends on Tree-successor which takes O(h) time

So running time of deletion is O(log n)

Insertion ------------ O(log n)

Tree-Insert(T,z)

point y to parent of each node

x to current node

while x!=null // traverses tree downwards takes O(h)

if key[z] < key[x]

x=left[x]

else x=right[x]

at end y will hold the node where to insert z

p[z] = y

If y=null //means tree is null then create root

root[T]= z

else

set z as appropiate left or right child of y

Running Time

takes O(h) time

Build BST---------worst O(n^2)

In the worst case it takes O(n^2) when we have sorted list so all goes one after other in tree

making height of tree n

if we use self balanced tree it can be performed in O(nlogn)

Sorting ------worst O(n^2)

Build BST

perform Inorder traversal

worst case that of build procedure O(n^2)

poor cache performance and overhead of space

most efficient for incremental sorting

Range(a,b)

returns range of values a<=n<=b

find a and b in the tree

Let Pa and Pb be the search path from root to a and b resp.

Let x be the node where pa and pb split

for every node v in the path a tox

if v > a

print v

inorder(right.v)

for every node v in the path b to x

if v < b

print v

inorder(left.v)

Total time

finding the paths Pa and Pb takes at most O(h)

each inorder takes O(n) for n node tree

here we have k elements so O(k)

O(h+k)

Check the sequence if possible on searching

build the bst by placing larger on right and smaller on left

now check for each node every node in right subtree should be greater and in left should be small

Example

935, 278, 347, 621, 299, 392, 358, 363

isn't a possible sequence because 299 can't be in the right sub-tree of 347.

Problem Two binary search tree T1 and T2 with distinct nodes, each node in T1 is small than node in T2

Time complexity to merge T1 and T2 and height of new BST

Solution

If height of T1, h1, is greater than h2 then

find the largest element v of T1 and make it root

v= Find-Max(T1) //O(h1)

v.right =T2

v.left=T1

since maximum of T1 is less than T2 and will be greater than remaining T1

similarly when h2>h1 we can

Find-Min(T2) and make it root in time O(h2)

In both cases running time is O(min(h1,h2)) height of tree would be O(max(h1,h2)+1)

Tree Rotation

changes the structure without interfering with the order of the elements.

node node moves up(smaller subtree) other down(larger subtree)

Right rotation

a right rotation b

/ \ -------------- / \

b c d a

/ \ / \

d e e c

AVL Tree

self balancing binary search tree

height of two child subnodes of any node differ by at most 1 or balance factor 1 0 -1

balance factor = height of left sunode - height of right

After each basic operation we perform rotations to keep tree balanced

Height of AVL tree

g(h) number of nodes in the worst case the minimum number of nodes a AVL tree can have

g(h) = 1+ g(h-1)+g(h-2)

where g(0) = 1 and g(1)=2

interms of fibonacci sequence its

g(n) = f(n+2) -1

height of AVL tree is < 1.44 * log (n+2) - 1

Height of Red black tree is at most 2log(n+1)

Problem what is minimum number of nodes in AVL tree of height 10

Solution we know g(0) =1 and g(1) =2 and g(h) = g(h-1)+g(h-2) +1

so g(2) = 1+2+1 =4, g(3) = 7,g(4) = 12,g(5)=20,g(6)=33,g(7)=54,g(8)=88,g(9)=143,g(10)=232

Look up

look up is similar to unbalanced BST

since here its balanced in the worst also it takes O(log n)

Insertion

need to check ancestors for balance, if it becomes +- 2 we need to balance it

i)right-right case : when balance factor p for node is -2 that is right child outweighs

check balance factor of right subchild (R )

if p(R) <= 0 then left rotation with P as root

if p(R)= +1 then double rotation wrt to P is needed first with R as root and next with P as root

ii) left-left case when balance factor for node P is +2 that is left outweighs